Whether we're talking about churn, retention or renewal rates, the fundamental aim is the same: keep the revenue you already have.

The gravity of this challenge cannot be overstated: if you aren't able to improve your renewal rates to a sustainable level, your business will bleed out and die.

Okay, maybe that was a bit dramatic, but you get the point: the stakes are high. Failing to improve renewal rates can result in:

Over the last 10 years, the proliferation of software solutions, business models, and pricing strategies has resulted in a plethora of new revenue retention metrics, so let's first align on our terminology. I am defining renewal rate as:

Renewal Rate = 100% - Lost ACV/(Lost ACV + Total Renewed ACV)

[ACV: Annual Contract Value]

Let's work through an example: $5M of ACV is up for renewal in Q1-2017, and your team successfully renews $4.6M of it, but alas, $400k is lost to the churn monster:

Renewal Rate = 100% - Lost ACV/(Lost ACV + Total Renewed ACV)

Renewal Rate = 100% - $400k/($400k + $4.6M)

Renewal Rate = 100% - $400k/$5M

Renewal Rate = 100% - 8%

Renewal Rate = 92%
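The formula and the worked example above translate directly into a small function. This is a minimal sketch: the name `renewal_rate` and its parameters are hypothetical, and ACV values are plain dollar amounts. The steps mirror the hand calculation (compute the lost share, then subtract it from 100%).

```python
def renewal_rate(lost_acv: float, renewed_acv: float) -> float:
    """Return the renewal rate as a percentage.

    Renewal Rate = 100% - Lost ACV / (Lost ACV + Total Renewed ACV)
    """
    up_for_renewal = lost_acv + renewed_acv  # total ACV that came up for renewal
    if up_for_renewal <= 0:
        raise ValueError("no ACV was up for renewal")
    lost_pct = 100.0 * lost_acv / up_for_renewal  # share of ACV lost to churn, in %
    return 100.0 - lost_pct


# Q1-2017 example: $5M up for renewal, $4.6M renewed, $400k lost
rate = renewal_rate(lost_acv=400_000, renewed_acv=4_600_000)
print(f"Renewal Rate = {rate:.0f}%")  # Renewal Rate = 92%
```

The zero-denominator guard matters for small cohorts, such as a quarter in which nothing happened to come up for renewal.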

Easy enough, right? Good. Related to this, if you're interested in seeing all the different ways that SaaS companies report these metrics to the Street, I highly recommend bookmarking Pacific Crest's report: Public SaaS Company Disclosure Metrics for Retention and Renewal Rates.

It's become fashionable to report on "net" revenue metrics. "Net" metrics are created by simply combining two numbers, typically expansion and churn. The risk here is that expansion from existing accounts can mask a churn problem. Or as I like to say, "Nets can cover things up."
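To see how a net number can cover up churn, here is a minimal sketch that reuses the Q1-2017 cohort and adds a hypothetical $600k of expansion from the renewing accounts. The `net_rate` formula is one simplified definition chosen for illustration (an assumption, not a metric this article adopts), and both function names are hypothetical.

```python
def gross_renewal_rate(up_for_renewal: float, lost: float) -> float:
    """Share of the ACV up for renewal that was kept, in percent (churn only)."""
    return 100.0 * (up_for_renewal - lost) / up_for_renewal


def net_rate(up_for_renewal: float, lost: float, expansion: float) -> float:
    """Hypothetical simplified 'net' metric: churn and expansion folded into one number, in percent."""
    return 100.0 * (up_for_renewal - lost + expansion) / up_for_renewal


up, lost = 5_000_000, 400_000  # Q1-2017 cohort from the renewal rate example
expansion = 600_000            # hypothetical upsell from the renewing accounts

print(f"gross: {gross_renewal_rate(up, lost):.0f}%")   # gross: 92%
print(f"net:   {net_rate(up, lost, expansion):.0f}%")  # net:   104%
```

The $400k churn problem is exactly the same in both lines, yet the net figure reads as better than 100%.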

Therefore, "net" metrics are out of scope for this article as they increase the complexity of something simple: keeping the revenue you already have.

3 Ways to Improve Renewal Rates

"Screen the team." This strategy has to do with the rigor your company applies to screening customer teams during the sales process. Given the hundreds of software options in the crowded cloud, the real battles aren't being fought over technology, but rather program resources. Similar to organizing an effective sports team, your Sales Engineers and Account Executives must evaluate:

Does this potential customer have the right players on the field?

If not, how do we make a business case for additional resources, whether internal staff or external agency help?

Do they have access to developer resources? If so, how many hours per week? How many sprints per release? How many stories per epic? Specificity is key here.

Who is going to own the day-to-day adoption and evolution of the program to use your software, i.e. who is the program manager?

Does the potential customer have strong executive sponsorship and a desire to make this work?

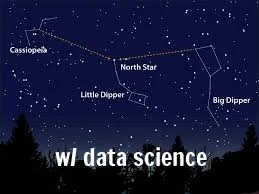

"ROC your renewals." If you aren't familiar with ROC curves, now is a good time to start. The goal of this strategy is to perform data science analysis to identify the 1-2 customer attributes and/or behaviors that are highly correlated with retention success, e.g. renewal rates, and then mobilizing your entire company—marketing, sales, customer success, design, engineering, everyone—to prioritize and improve these metrics:

"Pay the retention piper." Incentives matter. No matter how inspirational your company or product vision is, the behavior of your employees are primarily driven by how they are compensated. If your Account Executives are comp'd 100% on growth, they will spend 100% of their time closing new business (and 0% on retention). If your Customer Success Managers are comp'd 100% on usage metrics, they will spend the vast majority of their time on improving usage (and little time on lead generation, customer references, etc).